Run Python Applications Efficiently With malloc_trim

Many large pieces of software, like the Instagram, YouTube, and Dropbox web applications, started off as monolithic Python applications. Building web applications in Python is straightforward, thanks to the language's ease of use and its high-quality open source libraries.

Motivation

Python comes with automatic memory management (in CPython, reference counting plus a garbage collector for reference cycles), which frees developers from deallocating unused objects manually. This is extremely beneficial for delivering features faster, but it subjects applications to the whims of the memory management system and the garbage collection algorithm. For example, GC pauses are notorious in other garbage-collected languages like Java.
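To see how much time your own process spends in the cycle collector, the standard library's `gc.callbacks` hook is called at the start and end of every collection. A minimal sketch that records those pauses; the `GcPauseRecorder` name is hypothetical:

```python
import gc
import time
from typing import List


class GcPauseRecorder:
    """Records the wall-clock duration of each cyclic garbage collection."""

    def __init__(self) -> None:
        self.pauses: List[float] = []  # seconds per collection
        self._start = 0.0

    def __call__(self, phase: str, info: dict) -> None:
        # gc calls each callback with phase "start" before and "stop" after a collection.
        if phase == "start":
            self._start = time.perf_counter()
        elif phase == "stop":
            self.pauses.append(time.perf_counter() - self._start)

    def install(self) -> None:
        gc.callbacks.append(self)

    def uninstall(self) -> None:
        gc.callbacks.remove(self)


if __name__ == "__main__":
    recorder = GcPauseRecorder()
    recorder.install()
    # Create reference cycles so the collector has something to do.
    for _ in range(10_000):
        cycle: list = []
        cycle.append(cycle)
    gc.collect()
    recorder.uninstall()
    print(f"{len(recorder.pauses)} collections, longest pause {max(recorder.pauses):.6f}s")
```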

Since Python is rarely used for performance-sensitive applications, speed often isn't the defining concern, but automatic memory management has other, subtler consequences. Standard stateless Python web servers (running on CPython) are often memory bound rather than CPU or I/O bound. This is because every object is allocated on the heap, and large open source libraries like numpy can take up ~50 MB just by being imported into the program. Therefore, the infrastructure cost of running the application is directly tied to its memory efficiency and, by extension, to its memory management. This is often true for other dynamic languages like PHP and Ruby as well.
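A rough way to check what an import costs in your own deployment is to compare the process's resident set size (RSS) before and after importing a module. A Linux-only sketch that reads `/proc/self/statm`; the helper names are hypothetical, and the number is only meaningful the first time a module is imported in a fresh process:

```python
import importlib
import os


def rss_bytes() -> int:
    """Current resident set size of this process (Linux only)."""
    with open("/proc/self/statm") as f:
        # Fields are counted in pages; the second field is the resident set size.
        resident_pages = int(f.read().split()[1])
    return resident_pages * os.sysconf("SC_PAGE_SIZE")


def import_cost(module_name: str) -> int:
    """Approximate RSS growth, in bytes, caused by importing module_name.

    An already-imported module will report roughly zero.
    """
    before = rss_bytes()
    importlib.import_module(module_name)
    return rss_bytes() - before


if __name__ == "__main__":
    # numpy is only an example; substitute any heavy dependency you use.
    try:
        print(f"numpy added ~{import_cost('numpy') / 2**20:.1f} MiB of RSS")
    except ModuleNotFoundError:
        print("numpy is not installed")
```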

One approach to running Python web servers more efficiently is to pack as many application processes as possible onto a single host (or pod) and set a memory limit for each process (potentially via cgroups) so that no process starves the others. A process that exceeds its limit gets OOM (out of memory) killed. The lower these limits can be set, the more processes fit on the same hardware. This approach also works around CPython's Global Interpreter Lock (GIL) by achieving concurrency through processes rather than threads.
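The payoff from lowering per-process limits comes down to simple division, assuming every process may grow to its full limit at the same time. A back-of-the-envelope sketch; the host size, reserved headroom, and limits below are made-up numbers, not measurements:

```python
def processes_per_host(host_mib: int, per_process_limit_mib: int, reserved_mib: int = 0) -> int:
    """How many worker processes fit on a host if each may reach its memory limit."""
    if per_process_limit_mib <= 0:
        raise ValueError("per-process limit must be positive")
    usable = max(host_mib - reserved_mib, 0)
    return usable // per_process_limit_mib


if __name__ == "__main__":
    host = 64 * 1024      # hypothetical 64 GiB host
    reserved = 4 * 1024   # hypothetical headroom for the OS and other daemons
    for limit in (2048, 1536, 1024):
        print(f"{limit} MiB limit -> {processes_per_host(host, limit, reserved)} processes")
```

With these hypothetical numbers, trimming the limit from 2 GiB to 1.5 GiB per process takes the host from 30 to 40 processes.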

There's another hitch with long-running applications: even without memory leaks, a Python process's memory use tends to grow over its lifetime. Python's small-object allocator (pymalloc) grabs memory in large arenas and doesn't necessarily return them to the OS even after the objects in them are freed, due to fragmentation and free list growth. Larger allocations, and memory used by C extensions, are served by the C library's malloc, and malloc/free doesn't like returning memory to the kernel too often either. OOM kills are then just a fact of life with long-running Python applications.
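A rough way to observe this on Linux: allocate many small objects, free most of them while keeping a scattered few alive, and compare resident memory before and after. A minimal sketch; keeping 1 in 100 objects is an arbitrary choice to spread live data across the heap, and the numbers depend on the Python version and allocator, so treat the output as illustrative only:

```python
import gc
import os


def rss_mib() -> float:
    """Resident set size of this process in MiB (Linux only)."""
    with open("/proc/self/statm") as f:
        resident_pages = int(f.read().split()[1])
    return resident_pages * os.sysconf("SC_PAGE_SIZE") / 2**20


def churn(n: int = 1_000_000, keep_every: int = 100):
    """Allocate n small objects, then drop all but every keep_every-th one."""
    before = rss_mib()
    objs = [str(i) * 3 for i in range(n)]  # many small string objects
    peak = rss_mib()
    survivors = objs[::keep_every]  # a few live objects scattered across the heap
    del objs
    gc.collect()
    after = rss_mib()
    return before, peak, after, survivors


if __name__ == "__main__":
    before, peak, after, _ = churn()
    print(f"before={before:.1f} MiB peak={peak:.1f} MiB after={after:.1f} MiB")
```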

Consequently, the goals are twofold: reduce peak memory use so that you can run as many processes as possible on the same hardware, and reduce the frequency of OOM kills so that they don't affect the availability and operability of the application. This implies three things. OOM kills should be jittered, so that processes don't all restart together. They should happen at long enough intervals (an hour or more) that debugging, such as introspecting application state, remains practical. And pushes and restarts of the application shouldn't require excessive spare capacity out of fear that a routine restart will coincide with a wave of OOM kills.
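One way to get that jitter is to give each process a slightly different memory limit, so processes that grow at similar rates still hit their limits at different times. A minimal sketch; the 2 GiB base and ±10% spread are hypothetical values, and how the limit is enforced (cgroups, a supervisor, etc.) is left out:

```python
import random
from typing import Optional


def jittered_limit(base_bytes: int, spread: float = 0.1, rng: Optional[random.Random] = None) -> int:
    """Pick a per-process memory limit uniformly within base_bytes * (1 +/- spread)."""
    if not 0 <= spread < 1:
        raise ValueError("spread must be in [0, 1)")
    if rng is None:
        rng = random.Random()
    low = int(base_bytes * (1 - spread))
    high = int(base_bytes * (1 + spread))
    return rng.randint(low, high)


if __name__ == "__main__":
    base = 2 * 1024**3  # hypothetical 2 GiB base limit
    limits = [jittered_limit(base) for _ in range(5)]
    print([f"{limit / 1024**3:.2f} GiB" for limit in limits])
```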

malloc_trim

That's where malloc_trim comes in. malloc_trim is a glibc function that tells the C library to release free heap memory back to the OS. This might make subsequent allocations slightly slower, but as mentioned earlier, that isn't much of a concern for applications that aren't performance sensitive.
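In glibc the function is declared as `int malloc_trim(size_t pad)`: `pad` is the amount of free space to leave untrimmed at the top of the heap, and the return value is 1 if any memory was released and 0 otherwise. It is a glibc extension, so it may be missing on other platforms such as macOS. A minimal sketch that declares the C signature for `ctypes` and degrades gracefully when the function isn't available; `load_malloc_trim` and `safe_trim` are hypothetical helper names:

```python
import ctypes
import ctypes.util
from typing import Callable, Optional


def load_malloc_trim() -> Optional[Callable[[int], int]]:
    """Return libc's malloc_trim, or None if this C library doesn't provide it."""
    libc_name = ctypes.util.find_library("c")
    if libc_name is None:
        return None
    libc = ctypes.CDLL(libc_name)
    try:
        malloc_trim = libc.malloc_trim
    except AttributeError:  # e.g. macOS, or a libc without this glibc extension
        return None
    malloc_trim.argtypes = [ctypes.c_size_t]  # size_t pad
    malloc_trim.restype = ctypes.c_int  # 1 if memory was released, else 0
    return malloc_trim


_MALLOC_TRIM = load_malloc_trim()


def safe_trim(pad: int = 0) -> Optional[bool]:
    """Ask libc to release free heap memory; None means malloc_trim is unavailable."""
    if _MALLOC_TRIM is None:
        return None
    return _MALLOC_TRIM(pad) == 1
```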

How do I use it?

malloc_trim is dead simple to set up. A simplified code example:

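```python
import ctypes
import os
import random
import time
from threading import Thread

import psutil

# Example value: set this somewhat below the per-process memory (OOM) limit.
MEMORY_THRESHOLD = 1024 * 1024 * 1024  # 1 GiB in bytes

# malloc_trim is a glibc function, so this only works on glibc-based Linux.
libc = ctypes.CDLL("libc.so.6")


def trim_memory() -> int:
    # Returns 1 if memory was released back to the OS, 0 otherwise.
    return libc.malloc_trim(0)


def should_trim_memory() -> bool:
    # Check whether we're close to our OOM limit via the resident set size.
    process = psutil.Process(os.getpid())
    return process.memory_info().rss > MEMORY_THRESHOLD


def trim_loop() -> None:
    while True:
        # Sleep a random 30-60s so processes on one host don't trim in lockstep.
        time.sleep(random.uniform(30, 60))
        if not should_trim_memory():
            continue
        ret = trim_memory()
        print("trim memory result:", ret)


def main() -> None:
    # Start the trim thread as a daemon so it never blocks process exit.
    thread = Thread(name="TrimThread", target=trim_loop, daemon=True)
    thread.start()
    # run web server
```

Essentially, you run a thread in the background that periodically asks libc to release free memory once the process approaches a memory threshold, much like a periodic garbage collection loop.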
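A `trim_loop` like this runs forever, which is fine for a daemon thread but awkward in tests or during graceful shutdown. A variant sketch that uses `threading.Event` so the loop can be stopped; the `TrimThread` class, its parameters, and passing in the trim and RSS functions are illustrative choices, not part of the original setup:

```python
import random
import threading
import time
from typing import Callable


class TrimThread(threading.Thread):
    """Calls trim() every min_interval..max_interval seconds while rss() > threshold."""

    def __init__(
        self,
        trim: Callable[[], int],
        rss: Callable[[], int],
        threshold: int,
        min_interval: float = 30.0,
        max_interval: float = 60.0,
    ) -> None:
        super().__init__(name="TrimThread", daemon=True)
        self._trim = trim
        self._rss = rss
        self._threshold = threshold
        self._min_interval = min_interval
        self._max_interval = max_interval
        self._stop_event = threading.Event()
        self.trims = 0  # number of times trim() was called

    def run(self) -> None:
        # Event.wait returns True as soon as stop() is called, ending the loop
        # without sleeping out the rest of the interval.
        while not self._stop_event.wait(random.uniform(self._min_interval, self._max_interval)):
            if self._rss() > self._threshold:
                self._trim()
                self.trims += 1

    def stop(self) -> None:
        self._stop_event.set()


if __name__ == "__main__":
    # Stand-ins for malloc_trim and an RSS reader, with short intervals for the demo.
    t = TrimThread(trim=lambda: 0, rss=lambda: 10, threshold=5,
                   min_interval=0.01, max_interval=0.02)
    t.start()
    time.sleep(0.2)
    t.stop()
    t.join()
    print("trim called", t.trims, "times")
```

In a real server you would pass the `trim_memory` function and an RSS reader such as `psutil.Process().memory_info().rss`, then call `stop()` and `join()` during shutdown.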

Alternatives

Another approach is to switch to jemalloc, a general-purpose memory allocator designed to reduce fragmentation. Others have reported significant benefits from it, and with jemalloc, malloc_trim wouldn't be needed. But it has its own downsides and doesn't work in all cases.
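jemalloc is commonly swapped in without code changes by preloading the shared library, for example `LD_PRELOAD=/path/to/libjemalloc.so python app.py` (the path depends on your distribution). A wrong path only makes the dynamic loader print a warning, after which the process keeps running on glibc's malloc, so it's worth confirming the library is actually mapped into the process. A Linux-only sketch; `loaded_libraries` and `using_jemalloc` are hypothetical helper names:

```python
from typing import Set


def loaded_libraries() -> Set[str]:
    """Paths of shared objects currently mapped into this process (Linux only)."""
    libs = set()
    with open("/proc/self/maps") as f:
        for line in f:
            parts = line.split()
            # Columns: address, perms, offset, dev, inode, and (optionally) a path.
            if len(parts) >= 6 and ".so" in parts[5]:
                libs.add(parts[5])
    return libs


def using_jemalloc() -> bool:
    return any("jemalloc" in path for path in loaded_libraries())


if __name__ == "__main__":
    print("jemalloc loaded:", using_jemalloc())
```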

There are also several complementary approaches, like __slots__, to reduce memory use for Python apps.
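To see what `__slots__` buys, you can compare the memory traced by the standard library's `tracemalloc` while creating many instances of two otherwise identical classes. A minimal sketch; the `PointDict`/`PointSlots` classes and the instance count are illustrative:

```python
import tracemalloc


class PointDict:
    def __init__(self, x: int, y: int) -> None:
        self.x = x
        self.y = y


class PointSlots:
    __slots__ = ("x", "y")  # fixed attribute set, no per-instance __dict__

    def __init__(self, x: int, y: int) -> None:
        self.x = x
        self.y = y


def allocated_bytes(cls, n: int = 100_000) -> int:
    """Bytes traced by tracemalloc while creating n instances of cls."""
    tracemalloc.start()
    try:
        before, _ = tracemalloc.get_traced_memory()
        instances = [cls(i, i) for i in range(n)]  # kept alive until measured
        after, _ = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return after - before


if __name__ == "__main__":
    print("regular:", allocated_bytes(PointDict), "bytes")
    print("slots:  ", allocated_bytes(PointSlots), "bytes")
```

The exact savings vary across CPython versions, since recent releases store instance attributes more compactly even without `__slots__`.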

Credits

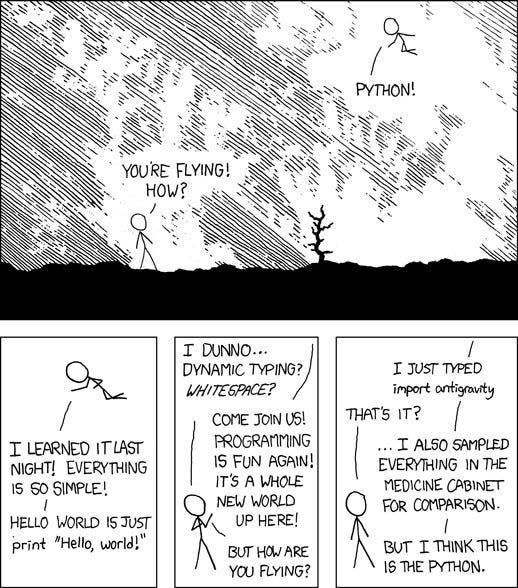

Credit goes to Jukka Lehtosalo and Ivan Levkivskyi, who proposed using malloc_trim, and to XKCD for the image used above.

Have you measured any improvement by doing this?

jemalloc wouldn't help in the naive Python interpreter. pymalloc directly uses OS virtual memory allocation routines for its object heap, which is mmap on Linux.

I think given the nature of pymalloc (arenas and pools), it would be beneficial for CPython to provide visualization into fragmentation to understand if things like malloc_trim are actually useful (no doubt that for your workload you must have measured and seen improvements).

A moving GC is actually probably the ideal solution for Python but would be incompatible with CPython semantics (specifically that `id()` is often the pointer address in memory.)